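This tutorial (written for Ubuntu 18.04) also applies to Ubuntu 22.04 and Ubuntu 20.04. If you are not very familiar with TensorRT, use exactly the library versions given below. Note: before installing these libraries on Linux, enter the system BIOS and turn off Secure Boot (set Secure Boot to Disabled); with Secure Boot on, the unsigned NVIDIA kernel module may fail to load. TensorRT depends on CUDA and cuDNN, so this article also explains how to install them, along with OpenCV. Finally, it shows how to write the CMakeLists files needed to use TensorRT in a project.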
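Once Secure Boot has been switched off in the BIOS, you can double-check it from Linux with `mokutil --sb-state`. That command typically prints `SecureBoot enabled` or `SecureBoot disabled`. The sketch below wraps the check. `sb_state_from_text` is a hypothetical helper name, and the sketch assumes that exact wording; any other output is reported as `unknown`.

```bash
#!/usr/bin/env bash
# Sketch: classify the output of `mokutil --sb-state`.
# Assumes the usual wording "SecureBoot enabled" / "SecureBoot disabled";
# sb_state_from_text is a hypothetical helper, not part of mokutil.
sb_state_from_text() {
  case "$1" in
    *"SecureBoot disabled"*) echo "disabled" ;;
    *"SecureBoot enabled"*)  echo "enabled" ;;
    *)                       echo "unknown" ;;  # e.g. non-EFI systems
  esac
}
```

Usage: `sb_state_from_text "$(mokutil --sb-state 2>&1)"` should print `disabled` before you continue. If `mokutil` is missing, install it with `sudo apt-get install mokutil`.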

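1.1 Install the toolchain and OpenCV

    sudo apt-get update
    sudo apt-get install build-essential
    sudo apt-get install git
    sudo apt-get install gdb
    sudo apt-get install cmake
    sudo apt-get install libopencv-dev
    # check the version (Ubuntu 18.04: opencv; Ubuntu 20.04/22.04: opencv4)
    # pkg-config --modversion opencv

1.2 Install the NVIDIA libraries

Note: the NVIDIA download sites require a registered account.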

1.2.1 Install the NVIDIA graphics driver

    ubuntu-drivers devices
    sudo add-apt-repository ppa:graphics-drivers/ppa
    sudo apt update
    sudo apt install nvidia-driver-470-server  # for ubuntu18.04
    nvidia-smi

1.2.2 Install CUDA 11.3

Go to https://developer.nvidia.com/cuda-toolkit-archive and choose CUDA Toolkit 11.3.0 (April 2021). Then select [Linux] -> [x86_64] -> [Ubuntu] -> [18.04] -> [runfile (local)]. The page then shows the install commands below (copied here):

    wget https://developer.download.nvidia.com/compute/cuda/11.3.0/local_installers/cuda_11.3.0_465.19.01_linux.run
    sudo sh cuda_11.3.0_465.19.01_linux.run

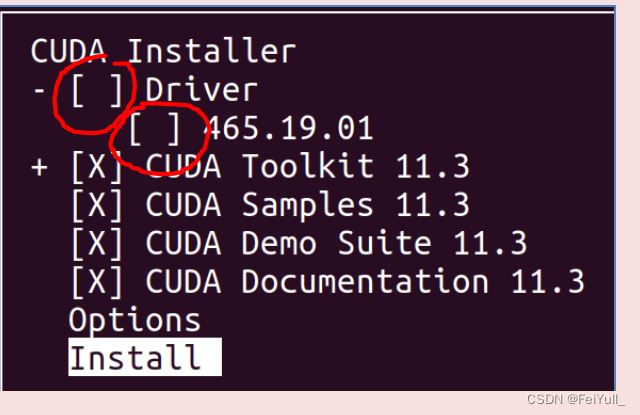

The CUDA installer asks you to make a few choices in the terminal. Choose as follows:

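Select [continue] -> [accept]. Then press Enter to deselect the Driver (465.19.01) entry, as shown in the figure (it is important! The driver was already installed in step 1.2.1). Finally, select [Install].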

Installation is complete when the terminal shows the following:

    #===========
    #= Summary =
    #===========
    #Driver:   Not Selected
    #Toolkit:  Installed in /usr/local/cuda-11.3/
    #......

Add CUDA to the environment variables. Open `~/.bashrc` (for example with `vim ~/.bashrc`) and append the following lines:

    # cuda v11.3
    export PATH=/usr/local/cuda-11.3/bin${PATH:+:${PATH}}
    export LD_LIBRARY_PATH=/usr/local/cuda-11.3/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
    export CUDA_HOME=/usr/local/cuda-11.3

Then reload the environment variables and verify:

    source ~/.bashrc
    nvcc -V

CUDA 11.3 is installed correctly if the terminal prints the following:

    Copyright (c) 2005-2021 NVIDIA Corporation
    Built on Sun_Mar_21_19:15:46_PDT_2021
    Cuda compilation tools, release 11.3, V11.3.58
    Build cuda_11.3.r11.3/compiler.29745058_0
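The two steps above (appending the exports to `~/.bashrc` and checking `nvcc -V`) can also be scripted. Below is a minimal sketch in which `add_cuda_env` and `nvcc_release` are hypothetical helper names. It reuses the `# cuda v11.3` comment as a marker, so running the function twice does not duplicate the exports. The `${PATH:+:${PATH}}` form expands to `:$PATH` only when `PATH` is non-empty, so no stray colon is left behind.

```bash
#!/usr/bin/env bash
# Sketch only: automates "append the CUDA 11.3 exports" and "check nvcc -V".
# add_cuda_env and nvcc_release are hypothetical helpers, not NVIDIA tools.

# Append the exports to an rc file (default ~/.bashrc) unless already present.
add_cuda_env() {
  local rc_file="${1:-$HOME/.bashrc}"
  # The marker comment doubles as a "was this added before?" check.
  if grep -qxF '# cuda v11.3' "$rc_file" 2>/dev/null; then
    return 0
  fi
  # Start on a fresh line in case the file does not end with a newline.
  printf '\n' >> "$rc_file"
  # Quoted heredoc: ${PATH:+:${PATH}} is written literally and only expanded
  # when the rc file is sourced; it yields ":$PATH" only if PATH is non-empty.
  cat >> "$rc_file" <<'EOF'
# cuda v11.3
export PATH=/usr/local/cuda-11.3/bin${PATH:+:${PATH}}
export LD_LIBRARY_PATH=/usr/local/cuda-11.3/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
export CUDA_HOME=/usr/local/cuda-11.3
EOF
}

# Read `nvcc -V` output on stdin and print the release, e.g. "11.3".
nvcc_release() {
  sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\),.*/\1/p'
}
```

Usage: `add_cuda_env && source ~/.bashrc && nvcc -V | nvcc_release` should print `11.3` on a correctly configured machine.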

1.2.3 Install cuDNN 8.2

Go to https://developer.nvidia.com/rdp/cudnn-archive and choose "Download cuDNN v8.2.0 (April 23rd, 2021), for CUDA 11.x". Then choose "cuDNN Library for Linux (x86_64)". This downloads the archive "cudnn-11.3-linux-x64-v8.2.0.53.tgz". Extract it:

    # extract
    tar -zxvf cudnn-11.3-linux-x64-v8.2.0.53.tgz
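Before copying anything, you can confirm that the extracted archive really is cuDNN 8.2.0. In cuDNN 8.x the version macros (`CUDNN_MAJOR`, `CUDNN_MINOR`, `CUDNN_PATCHLEVEL`) live in `cudnn_version.h`. The hypothetical `cudnn_version` helper below reads them. By default it assumes you run it from the directory where the `.tgz` was extracted, so the header is at `cuda/include/cudnn_version.h`.

```bash
#!/usr/bin/env bash
# Sketch: print the cuDNN version recorded in cudnn_version.h.
# cuDNN 8.x defines CUDNN_MAJOR / CUDNN_MINOR / CUDNN_PATCHLEVEL in that header.
# cudnn_version is a hypothetical helper; the default path assumes you are in
# the directory where the cuDNN .tgz was extracted.
cudnn_version() {
  local header="${1:-cuda/include/cudnn_version.h}"
  if [ ! -r "$header" ]; then
    echo "cannot read $header" >&2
    return 1
  fi
  awk '
    /^#define CUDNN_MAJOR /      { major = $3 }
    /^#define CUDNN_MINOR /      { minor = $3 }
    /^#define CUDNN_PATCHLEVEL / { patch = $3 }
    END {
      if (major == "") exit 1   # macros not found: not a cuDNN 8 header
      print major "." minor "." patch
    }' "$header"
}
```

For this archive it should print `8.2.0`. After the copy step that follows, `cudnn_version /usr/local/cuda/include/cudnn_version.h` checks the installed copy instead.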

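Copy the cuDNN headers and libraries into the CUDA 11.3 installation directory. Here `/usr/local/cuda` is the symlink to `/usr/local/cuda-11.3` that the runfile installer normally creates. cuDNN 8 splits its API across several `cudnn*.h` headers, so copy all of them rather than only `cudnn.h`. Use `cp -P` so the library symlinks stay intact:

    sudo cp cuda/include/cudnn*.h /usr/local/cuda/include/
    sudo cp -P cuda/lib64/libcudnn* /usr/local/cuda/lib64/
    sudo chmod a+r /usr/local/cuda/include/cudnn*.h
    sudo chmod a+r /usr/local/cuda/lib64/libcudnn*

1.2.4 Download TensorRT 8.4.2.4

In this tutorial, TensorRT only needs to be downloaded and extracted; no installation is required.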

Go to https://developer.nvidia.cn/nvidia-tensorrt-8x-download (update 2023.12: the page is now at https://developer.nvidia.com/nvidia-tensorrt-8x-download).

(By the way, f*** Nvidia.) Tick "I Agree To the Terms of the NVIDIA TensorRT License Agreement" and choose "TensorRT 8.4 GA Update 1". Then choose "TensorRT 8.4 GA Update 1 for Linux x86_64 and CUDA 11.0, 11.1, 11.2, 11.3, 11.4, 11.5, 11.6 and 11.7 TAR Package". This downloads the archive "TensorRT-8.4.2.4.Linux.x86_64-gnu.cuda-11.6.cudnn8.4.tar.gz".

    # extract
    tar -zxvf TensorRT-8.4.2.4.Linux.x86_64-gnu.cuda-11.6.cudnn8.4.tar.gz
    # quick check that tensorrt + cuda + cudnn work together
    cd TensorRT-8.4.2.4/samples/sampleMNIST
    make
    cd ../../bin/

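Export the TensorRT environment variable (it is important!). Note: replace the path after `LD_LIBRARY_PATH:` with your own. You will also need to run the first line below again later, when compiling ONNX models.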

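    export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/xxx/temp/TensorRT-8.4.2.4/lib
    ./sample_mnist

CUDA + cuDNN + TensorRT are installed correctly if the terminal prints a handwritten-digit recognition result: an ASCII-art digit followed by the predicted class.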
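The form `export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/dir` has two side effects. It leaves a leading colon when `LD_LIBRARY_PATH` was empty, and running it repeatedly keeps appending the same directory. A hypothetical `add_ld_path` helper that avoids both is sketched below. It has to be defined in the current shell (pasted or sourced) for the export to affect later commands such as `./sample_mnist`.

```bash
#!/usr/bin/env bash
# Sketch: add a directory to LD_LIBRARY_PATH at most once.
# Unlike `export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/dir`, this adds no leading
# colon when the variable is empty and never appends a duplicate entry.
# add_ld_path is a hypothetical helper; define it in the current shell.
add_ld_path() {
  local dir="$1"
  [ -n "$dir" ] || return 1
  case ":${LD_LIBRARY_PATH}:" in
    *":${dir}:"*) ;;  # already on the path: nothing to do
    *) LD_LIBRARY_PATH="${LD_LIBRARY_PATH:+${LD_LIBRARY_PATH}:}${dir}" ;;
  esac
  export LD_LIBRARY_PATH
}
```

Usage: run `add_ld_path /home/xxx/temp/TensorRT-8.4.2.4/lib` and then `./sample_mnist`, replacing the path with your own as above.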

Because TensorRT depends on CUDA and cuDNN, we first create a `common.cmake` file (shown below). It declares the header and library paths of these dependencies.

    # set
    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wno-deprecated-declarations")

    # find thirdparty
    find_package(CUDA REQUIRED)
    list(APPEND ALL_LIBS
        ${CUDA_LIBRARIES}
        ${CUDA_cublas_LIBRARY}
        ${CUDA_nppc_LIBRARY} ${CUDA_nppig_LIBRARY} ${CUDA_nppidei_LIBRARY} ${CUDA_nppial_LIBRARY})

    # include cuda's header
    # note: the variable is spelled INCLUDE_DRIS; keep this spelling consistent with the CMakeLists.txt that reads it
    list(APPEND INCLUDE_DRIS ${CUDA_INCLUDE_DIRS})

    # path to the extracted TensorRT tarball: change it to your own
    set(TensorRT_ROOT /home/xxxxxx/TensorRT-8.4.2.4)
    find_library(TRT_NVINFER NAMES nvinfer HINTS ${TensorRT_ROOT} PATH_SUFFIXES lib lib64 lib/x64)
    find_library(TRT_NVINFER_PLUGIN NAMES nvinfer_plugin HINTS ${TensorRT_ROOT} PATH_SUFFIXES lib lib64 lib/x64)
    find_library(TRT_NVONNX_PARSER NAMES nvonnxparser HINTS ${TensorRT_ROOT} PATH_SUFFIXES lib lib64 lib/x64)
    find_library(TRT_NVCAFFE_PARSER NAMES nvcaffe_parser HINTS ${TensorRT_ROOT} PATH_SUFFIXES lib lib64 lib/x64)
    find_path(TENSORRT_INCLUDE_DIR NAMES NvInfer.h HINTS ${TensorRT_ROOT} PATH_SUFFIXES include)
    list(APPEND ALL_LIBS ${TRT_NVINFER} ${TRT_NVINFER_PLUGIN} ${TRT_NVONNX_PARSER} ${TRT_NVCAFFE_PARSER})

    # include tensorrt's headers
    list(APPEND INCLUDE_DRIS ${TENSORRT_INCLUDE_DIR})

    # TensorRT's sample helper code
    set(SAMPLES_COMMON_DIR ${TensorRT_ROOT}/samples/common)
    list(APPEND INCLUDE_DRIS ${SAMPLES_COMMON_DIR})

    message(STATUS "INCLUDE_DRIS: ${INCLUDE_DRIS}")
    message(STATUS "ALL_LIBS: ${ALL_LIBS}")

One point needs special attention. `set(TensorRT_ROOT /home/xxxxxx/TensorRT-8.4.2.4)` in the file above sets the TensorRT path, so change it to your actual path. Next, create a `CMakeLists.txt` file. Line 5 of that file includes the `common.cmake` above, so adjust that path to match your own setup as well.
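Every `find_library`/`find_path` call above is driven by `TensorRT_ROOT`. A wrong path therefore usually shows up only later, as values such as `TRT_NVINFER-NOTFOUND` in `ALL_LIBS`. A small pre-check can catch this earlier. The `check_trt_root` helper below is a hypothetical sketch that assumes the tarball layout with `include/` and `lib/` directories. It does not cover the `lib64` or `lib/x64` suffixes that `common.cmake` also searches.

```bash
#!/usr/bin/env bash
# Sketch: check that a directory looks like an extracted TensorRT tarball
# before using it as TensorRT_ROOT in common.cmake.
# check_trt_root is a hypothetical helper; it only checks include/ and lib/.
check_trt_root() {
  local root="$1" missing=0 lib
  if [ -z "$root" ]; then
    echo "usage: check_trt_root /path/to/TensorRT-8.4.2.4" >&2
    return 2
  fi
  # Header that find_path(TENSORRT_INCLUDE_DIR ...) looks for.
  if [ ! -f "$root/include/NvInfer.h" ]; then
    echo "missing: $root/include/NvInfer.h" >&2
    missing=1
  fi
  # The same four libraries that common.cmake looks up with find_library.
  for lib in nvinfer nvinfer_plugin nvonnxparser nvcaffe_parser; do
    if [ ! -e "$root/lib/lib${lib}.so" ]; then
      echo "missing: $root/lib/lib${lib}.so" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ] && echo "TensorRT_ROOT looks usable: $root"
  return "$missing"
}
```

Usage: `check_trt_root /home/xxxxxx/TensorRT-8.4.2.4`, using the same path you put into `set(TensorRT_ROOT ...)`.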

This adds app_yolov8.cpp, together with a set of other .cpp and .cu files, to the project; the main function lives in app_yolov8.cpp.

For the full versions of the two files above, see:

common.cmake: https://github.com/FeiYull/TensorRT-Alpha/blob/main/cmake/common.cmake

CMakeLists.txt: https://github.com/FeiYull/TensorRT-Alpha/blob/main/yolov8/CMakeLists.txt