In-Class Practice Assignment: License Plate Recognition

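I. Dataset introduction

1. License plate recognition dataset: the VehicleLicense dataset contains 16,151 single-character images. Every character has been cleanly segmented and converted to a black-and-white binary image (see the first row below, the training data).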
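"Binary image" here means that every pixel is either 0 (black) or 255 (white). The plate-segmentation code later in this post produces such images with `cv2.threshold(gray, 175, 255, cv2.THRESH_BINARY)`, which sets a pixel to 255 when it is strictly greater than 175 and to 0 otherwise. The sketch below mirrors that rule in plain NumPy; `binarize` and `is_binary` are hypothetical helper names, and the assumption that the dataset stores its images with exactly the values 0 and 255 is ours.

```py
import numpy as np


def binarize(gray, thresh=175, maxval=255):
    """Hypothetical helper mirroring cv2.THRESH_BINARY:
    pixels strictly above `thresh` become `maxval`, all others become 0."""
    gray = np.asarray(gray)
    return np.where(gray > thresh, maxval, 0).astype(np.uint8)


def is_binary(img):
    """True if every pixel is 0 or 255 (assumed encoding of the dataset images)."""
    return bool(np.isin(img, (0, 255)).all())


# Tiny 2x3 grayscale example; note that 175 itself is NOT above the threshold
gray = np.array([[10, 175, 176],
                 [200, 90, 255]], dtype=np.uint8)
bw = binarize(gray)
print(bw)             # [[  0   0 255]
                      #  [255   0 255]]
print(is_binary(bw))  # True
```

A check like `is_binary` can be run over a few files from the extracted dataset to confirm the encoding before relying on it.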

2. characterData: the license plate recognition dataset

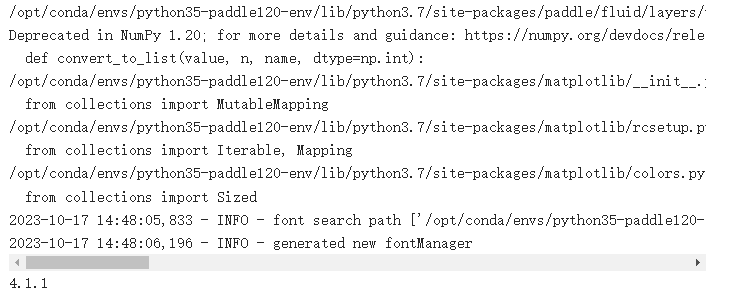

The code is as follows (example):

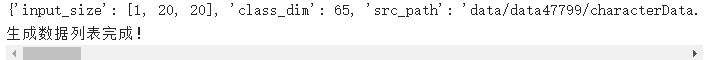

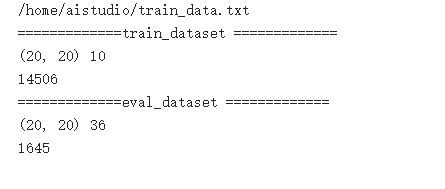

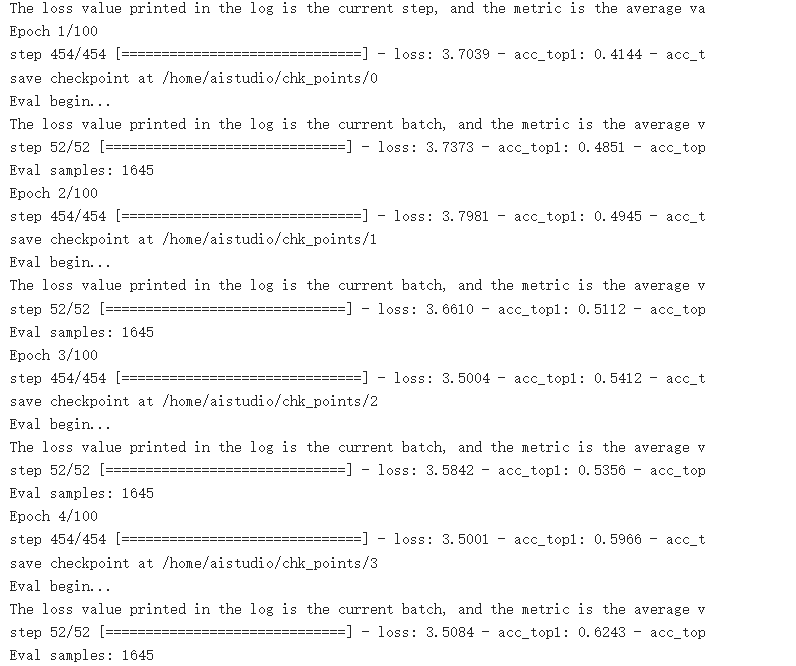

```py
# Import the required packages
import os
import zipfile
import random
import json

import cv2
import numpy as np
from PIL import Image
import paddle
import matplotlib.pyplot as plt

print(cv2.__version__)
```

2. Parameter configuration

```py
'''
Parameter configuration
'''
train_parameters = {
    "input_size": [1, 20, 20],                           # shape of an input image
    "class_dim": -1,                                     # number of classes (filled in by get_data_list)
    "src_path": "data/data47799/characterData.zip",      # path of the original dataset archive
    "target_path": "/home/aistudio/data/dataset",        # directory to extract into
    "train_list_path": "/home/aistudio/train_data.txt",  # path of train_data.txt
    "eval_list_path": "/home/aistudio/val_data.txt",     # path of val_data.txt
    "label_dict": {},                                    # label index -> class folder name
    "readme_path": "/home/aistudio/data/readme.json",    # path of readme.json
    "train_batch_size": 32                               # batch size used during training
}
```

3. Data processing

```py
def unzip_data(src_path, target_path):
    '''
    Extract the zip archive at src_path into target_path (data/dataset),
    skipping the step if that directory already exists.
    '''
    if not os.path.isdir(target_path):
        with zipfile.ZipFile(src_path, 'r') as z:
            z.extractall(path=target_path)
    else:
        print("Files already extracted")


def get_data_list(target_path, train_list_path, eval_list_path):
    '''
    Generate the training and validation list files.
    '''
    # Information about every class
    class_detail = []
    # Folder names of all classes
    data_list_path = target_path
    class_dirs = os.listdir(data_list_path)
    if '__MACOSX' in class_dirs:
        class_dirs.remove('__MACOSX')
    all_class_images = 0  # total number of images
    class_label = 0       # label of the current class
    class_dim = 0         # number of classes
    # Lines to be written to the validation and training list files
    trainer_list = []
    eval_list = []
    # Walk through every class folder
    for class_dir in class_dirs:
        if class_dir != ".DS_Store":
            class_dim += 1
            class_detail_list = {}  # information about this class
            eval_sum = 0
            trainer_sum = 0
            class_sum = 0  # number of images seen so far in this class
            path = os.path.join(data_list_path, class_dir)
            img_paths = os.listdir(path)
            for img_path in img_paths:  # iterate over every image in the folder
                if img_path == '.DS_Store':
                    continue
                name_path = os.path.join(path, img_path)  # path of this image
                if class_sum % 10 == 0:  # every 10th image goes to the validation set
                    eval_sum += 1        # number of validation images in this class
                    eval_list.append(name_path + "\t%d" % class_label + "\n")
                else:
                    trainer_sum += 1     # number of training images in this class
                    trainer_list.append(name_path + "\t%d" % class_label + "\n")
                class_sum += 1           # images in this class
                all_class_images += 1    # images over all classes
            # class_detail entry for the readme JSON file
            class_detail_list['class_name'] = class_dir                # class name
            class_detail_list['class_label'] = class_label             # class label
            class_detail_list['class_eval_images'] = eval_sum          # validation images in this class
            class_detail_list['class_trainer_images'] = trainer_sum    # training images in this class
            class_detail.append(class_detail_list)
            # Record the label -> class name mapping
            train_parameters['label_dict'][str(class_label)] = class_dir
            class_label += 1
    # Record the number of classes
    train_parameters['class_dim'] = class_dim
    print(train_parameters)
    # Shuffle and write the validation list
    random.shuffle(eval_list)
    with open(eval_list_path, 'a') as f:
        f.writelines(eval_list)
    # Shuffle and write the training list
    random.shuffle(trainer_list)
    with open(train_list_path, 'a') as f2:
        f2.writelines(trainer_list)
    # Summary information for the readme JSON file
    readjson = {}
    readjson['all_class_name'] = data_list_path  # parent directory
    readjson['all_class_images'] = all_class_images
    readjson['class_detail'] = class_detail
    jsons = json.dumps(readjson, sort_keys=True, indent=4, separators=(',', ': '))
    with open(train_parameters['readme_path'], 'w') as f:
        f.write(jsons)
    print('Data lists generated!')


'''
Parameter initialization
'''
src_path = train_parameters['src_path']
target_path = train_parameters['target_path']
train_list_path = train_parameters['train_list_path']
eval_list_path = train_parameters['eval_list_path']
batch_size = train_parameters['train_batch_size']

'''
Extract the original data to the target path
'''
unzip_data(src_path, target_path)

# Empty train_data.txt and val_data.txt before regenerating the lists
# (opening a file in 'w' mode truncates it)
with open(train_list_path, 'w'):
    pass
with open(eval_list_path, 'w'):
    pass

# Generate the data lists
get_data_list(target_path, train_list_path, eval_list_path)

'''
Custom dataset
'''
from paddle.io import Dataset


class MyDataset(Dataset):
    """Step 1: inherit from paddle.io.Dataset"""
    def __init__(self, mode='train'):
        """Step 2: implement the constructor and load the whole split into memory"""
        super(MyDataset, self).__init__()
        self.data = []
        self.label = []
        # 'train' reads the training list; any other mode reads the validation list
        list_path = train_list_path if mode == 'train' else eval_list_path
        print(list_path)
        with open(list_path, 'r') as f:
            lines = [line.strip() for line in f]
        for line in lines:
            # Split the image path and the label
            img_path, lab = line.split('\t')
            # Read the image and convert it to grayscale
            img = cv2.imread(img_path)
            img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            # Normalize pixel values to [0, 1]
            img = np.array(img).astype('float32') / 255.0
            self.data.append(img)
            self.label.append(int(lab))

    def __getitem__(self, index):
        """Step 3: implement __getitem__, returning one (image, label) pair for the given index"""
        data = self.data[index]
        label = self.label[index]
        # Note: labels must be returned as int64
        return data, np.array(label, dtype='int64')

    def __len__(self):
        """Step 4: implement __len__, returning the total number of samples"""
        return len(self.label)


# Test the dataset definitions
train_dataset = MyDataset(mode='train')
eval_dataset = MyDataset(mode='val')
print('=============train_dataset =============')
# Print the shape and label of one sample
print(train_dataset[1][0].shape, train_dataset[1][1])
# Print the dataset size
print(len(train_dataset))
print('=============eval_dataset =============')
# Print the shape and label of one sample
for data, label in eval_dataset:
    print(data.shape, label)
    break
# Print the dataset size
print(len(eval_dataset))
```

4. Model definition

```py
class MyDNN(paddle.nn.Layer):
    def __init__(self):
        super(MyDNN, self).__init__()
        # Fully connected network: 400 -> 200 -> 100 -> 100 -> 65 classes
        self.hidden1 = paddle.nn.Linear(in_features=20 * 20, out_features=200)
        self.hidden2 = paddle.nn.Linear(in_features=200, out_features=100)
        self.hidden3 = paddle.nn.Linear(in_features=100, out_features=100)
        # Output layer returns raw logits. No softmax here: paddle.nn.CrossEntropyLoss
        # already applies softmax by default, so a softmax layer would apply it twice.
        self.hidden4 = paddle.nn.Linear(in_features=100, out_features=65)
        self.relu = paddle.nn.ReLU()

    # forward defines the computation performed when the network runs
    def forward(self, input):
        # -1 means this dimension is inferred from the total number of elements
        # and the remaining dimensions; at most one dimension may be -1
        x = paddle.reshape(input, shape=[-1, 20 * 20])
        x = self.relu(self.hidden1(x))
        x = self.relu(self.hidden2(x))
        x = self.relu(self.hidden3(x))
        y = self.hidden4(x)
        return y
```

5. Model training

```py
# Step 3: train the model
# Wrap the network with paddle.Model
model = paddle.Model(MyDNN())
# Configure the optimizer, the loss function and the metrics
model.prepare(paddle.optimizer.Adam(parameters=model.parameters()),
              paddle.nn.CrossEntropyLoss(),
              paddle.metric.Accuracy(topk=(1, 5)))
# model.prepare(paddle.optimizer.SGD(parameters=model.parameters()), paddle.nn.CrossEntropyLoss(), paddle.metric.Accuracy(topk=(1, 5)))
# Callback for the VisualDL training-visualization tool
visualdl = paddle.callbacks.VisualDL(log_dir='visualdl_log')
# Run the full training loop
model.fit(train_dataset,             # training set
          eval_dataset,              # evaluation set
          epochs=100,                # total number of training epochs
          batch_size=batch_size,     # samples per batch
          shuffle=True,              # shuffle the samples
          verbose=1,                 # logging format
          save_dir='./chk_points/',  # where periodic checkpoints are saved
          callbacks=[visualdl])      # callbacks to use
# Save the model (writes model_save_dir.pdparams and model_save_dir.pdopt)
model.save('model_save_dir')

# Segment the license plate image into single characters
license_plate = cv2.imread('work/车牌.png')
print(license_plate.shape)
# cv2.imread returns BGR images, so convert with COLOR_BGR2GRAY
gray_plate = cv2.cvtColor(license_plate, cv2.COLOR_BGR2GRAY)
# ret: the threshold used; binary_plate: the thresholded image (values 0 or 255)
ret, binary_plate = cv2.threshold(gray_plate, 175, 255, cv2.THRESH_BINARY)

# Column projection: number of white pixels in each column
result = (binary_plate / 255).sum(axis=0)

# Record the [start, end] column range (end inclusive) of each character
character_dict = {}
num = 0
i = 0
while i < len(result):
    if result[i] == 0:
        i += 1
    else:
        index = i + 1
        # Bounds check so a character touching the right edge does not raise IndexError
        while index < len(result) and result[index] != 0:
            index += 1
        character_dict[num] = [i, index - 1]
        num += 1
        i = index

# Pad each character, resize it to 20x20 and save it
characters = []
for i in range(8):
    # Segment 2 is the dot that separates the first two characters from the rest
    if i == 2:
        continue
    start, end = character_dict[i]
    char_img = binary_plate[:, start:end + 1]  # +1 because end is inclusive
    # Pad the width to about 170 px so the crop is roughly square before resizing
    # (this assumes the plate image is about 170 px high)
    padding = (170 - char_img.shape[1]) / 2
    ndarray = np.pad(char_img, ((0, 0), (int(padding), int(padding))),
                     'constant', constant_values=(0, 0))
    ndarray = cv2.resize(ndarray, (20, 20))
    cv2.imwrite('work/' + str(i) + '.png', ndarray)
    characters.append(ndarray)


def load_image(path):
    # Read as a single-channel grayscale image
    img = cv2.imread(path, cv2.IMREAD_GRAYSCALE)
    img = img.astype('float32')
    # Add a channel axis and normalize to [0, 1]
    img = img[np.newaxis, ] / 255.0
    return img
```

Converting the labels

```py
print('Label:', train_parameters['label_dict'])
# Map each class folder name to the character it represents
match = {'A': 'A', 'B': 'B', 'C': 'C', 'D': 'D', 'E': 'E', 'F': 'F', 'G': 'G', 'H': 'H', 'I': 'I',
         'J': 'J', 'K': 'K', 'L': 'L', 'M': 'M', 'N': 'N', 'O': 'O', 'P': 'P', 'Q': 'Q', 'R': 'R',
         'S': 'S', 'T': 'T', 'U': 'U', 'V': 'V', 'W': 'W', 'X': 'X', 'Y': 'Y', 'Z': 'Z',
         'yun': '云', 'cuan': '川', 'hei': '黑', 'zhe': '浙', 'ning': '宁', 'jin': '津', 'gan': '赣',
         'hu': '沪', 'liao': '辽', 'jl': '吉', 'qing': '青', 'zang': '藏', 'e1': '鄂', 'meng': '蒙',
         'gan1': '甘', 'qiong': '琼', 'shan': '陕', 'min': '闽', 'su': '苏', 'xin': '新', 'wan': '皖',
         'jing': '京', 'xiang': '湘', 'gui': '贵', 'yu1': '渝', 'yu': '豫', 'ji': '冀', 'yue': '粤',
         'gui1': '桂', 'sx': '晋', 'lu': '鲁',
         '0': '0', '1': '1', '2': '2', '3': '3', '4': '4', '5': '5', '6': '6', '7': '7', '8': '8', '9': '9'}
# LABEL maps a class index (as a string) to its display character
LABEL = {idx: match[name] for idx, name in train_parameters['label_dict'].items()}
print(LABEL)
```

6. Model prediction

```py
from IPython.display import display

net = MyDNN()  # instantiate the network
net.set_state_dict(paddle.load('model_save_dir.pdparams'))  # load the trained parameters
net.eval()  # evaluation mode
lab = []
for i in range(8):
    if i == 2:  # skip the separator dot, as during segmentation
        continue
    infer_imgs = np.array([load_image('work/' + str(i) + '.png')])  # shape [1, 1, 20, 20]
    infer_imgs = paddle.to_tensor(infer_imgs)
    result = net(infer_imgs)
    lab.append(np.argmax(result.numpy()))
print(lab)
display(Image.open('work/车牌.png'))
for i in range(len(lab)):
    print(LABEL[str(lab[i])], end='')
```

Summary

Implementing license plate recognition gave me a deeper understanding of deep neural networks (DNNs).
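The train/validation split in `get_data_list` is deterministic within each class: counting that class's images from 0 (with `.DS_Store` skipped), every image whose index is a multiple of 10 goes to the validation list and the rest go to training, so a class with n images contributes ceil(n/10) validation samples. The pure-Python sketch below isolates just that rule; `split_every_tenth` is a hypothetical name and the file names are made up for illustration. It assumes the path list has already been filtered.

```py
import math


def split_every_tenth(paths, label):
    """Hypothetical helper reproducing the rule in get_data_list:
    the 0th, 10th, 20th, ... image of a class goes to validation and
    all others go to training. Each entry is '<path>TAB<label>' plus a newline."""
    train_lines, eval_lines = [], []
    for i, path in enumerate(paths):
        line = "%s\t%d\n" % (path, label)
        if i % 10 == 0:
            eval_lines.append(line)
        else:
            train_lines.append(line)
    return train_lines, eval_lines


paths = ["img_%02d.png" % k for k in range(23)]  # illustrative file names
train_lines, eval_lines = split_every_tenth(paths, label=7)
print(len(train_lines), len(eval_lines))  # 20 3
print(len(eval_lines) == math.ceil(len(paths) / 10))  # True
```

Because the split is taken before the lists are shuffled, each class keeps roughly a 9:1 ratio, which matters here since some province classes are much smaller than the digit and letter classes.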
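The plate segmentation relies on a vertical projection: count the white pixels in each column of the binarized plate, then treat every maximal run of non-empty columns as one character. The NumPy sketch below packages that idea as two hypothetical helpers, `segment_columns` (returning inclusive `(start, end)` column pairs, like `character_dict`) and `pad_to_width`; the tiny synthetic "plate" is invented for the example.

```py
import numpy as np


def segment_columns(binary, fg=255):
    """Hypothetical helper: split a binary image (foreground == fg) into runs
    of columns containing at least one foreground pixel.
    Returns inclusive (start, end) column pairs, left to right."""
    counts = (np.asarray(binary) == fg).sum(axis=0)  # vertical projection
    segments = []
    start = None
    for col, c in enumerate(counts):
        if c > 0 and start is None:
            start = col                           # a character begins
        elif c == 0 and start is not None:
            segments.append((start, col - 1))     # it ended on the previous column
            start = None
    if start is not None:                         # character touching the right edge
        segments.append((start, len(counts) - 1))
    return segments


def pad_to_width(char_img, width):
    """Zero-pad a character crop on the left and right to exactly `width` columns."""
    extra = max(width - char_img.shape[1], 0)
    left = extra // 2
    return np.pad(char_img, ((0, 0), (left, extra - left)),
                  mode="constant", constant_values=0)


# Synthetic 4x10 "plate": three strokes, the last one touching the right edge
plate = np.zeros((4, 10), dtype=np.uint8)
plate[:, 1:3] = 255
plate[1:3, 5] = 255
plate[:, 8:10] = 255
print(segment_columns(plate))                    # [(1, 2), (5, 5), (8, 9)]
print(pad_to_width(plate[:, 1:3], 6).shape)      # (4, 6)
```

One design difference from the original: padding `int((170 - w) / 2)` on both sides comes out one column short when `170 - w` is odd, whereas `pad_to_width` puts the extra column on the right so the width is always exact. After `cv2.resize` to 20x20 the difference is negligible.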
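The network as first written ends in `Linear(100, 65, act='softmax')`, while `paddle.nn.CrossEntropyLoss` applies softmax to its input by default, so the loss sees a softmax of a softmax. The NumPy sketch below (with a hypothetical `softmax` helper and made-up logits) shows why that matters: the argmax prediction does not change, but the probabilities are squashed. With 65 classes, even a perfect one-hot output becomes at most e/(e+64), about 0.041, after the second softmax, so the loss can never get close to zero and its gradients stay weak.

```py
import numpy as np


def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = np.asarray(z, dtype=np.float64)
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


logits = np.array([4.0, 1.0, 0.0])  # hypothetical raw scores for 3 classes
once = softmax(logits)              # what the loss sees when the net outputs logits
twice = softmax(once)               # what the loss sees when the net already ends in softmax

print(np.argmax(once) == np.argmax(twice))  # True: the predicted class is unchanged
print(once.max(), twice.max())              # top probability drops from about 0.94 to about 0.55

# With 65 classes, a perfect one-hot output is flattened to at most e / (e + 64)
print(softmax(np.eye(65)[0]).max())         # about 0.041
```

This is also why prediction with `np.argmax` works either way; the double softmax hurts training, not decoding.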